Optimizing the Redis Connection Pool

Sam Farid

CTO/Founder

TL;DR: Managing your (Redis) connection pool is a risky, time-intensive process that requires technical knowledge and production experimentation. We offer some advice and heuristics for tuning your connection pool, and share some aggregated data from Flightcrew.

Redis, a popular caching layer, is treated with caution because of its centrality; small issues often cascade into larger ones. At the same time, adding more resources to Redis can compromise reliability, and will obviously increase cloud costs.

While at Google Cloud, I helped many top-tier GCP customers detect and resolve Redis incidents. Now at Flightcrew, we’re building a config copilot that has a good sense of ‘magic numbers’ for Redis, and other services. Here’s some advice, heuristics and aggregated data for tuning the Redis Connection Pool:

What is a connection pool?

A connection pool tracks and reuses existing connections instead of opening new ones each time your application wants to speak to Redis. Storage solutions like Redis have a limited number of connections that they can accept at any given time, and it’s inefficient and slow to keep closing and recreating these connections. Using a connection pool is a best practice at any scale.

What configs do I need to track?

Redis’s client libraries for connection pools have many, many different configuration settings. For example, the top Go library (go-redis) has at least 15. The key settings to pay attention to are usually:

- Max Connections: Total connections available to the pool. Lower values can create a bottleneck where requests queue for a free connection, while higher values can lead to memory overflows on the client or on Redis.

- Idle Timeout: How long to wait before closing an inactive connection. This keeps idle connections from tying up memory in your application and on Redis.

- Retry Backoff: Specifies how long to wait before retrying failed connections. High values can lead to slow performance, and low values are the easiest way to DoS yourself as new and retrying traffic all blast Redis at the same time.

Infrastructure platform configs are equally important to the performance of your application and how it interacts with Redis, especially if you’ve enabled autoscaling on your platform. The key autoscaling configs are usually:

- Min/Max Replicas: tells the autoscaler the range of how many replicas to provision.

- Resource Requests: how much CPU and Memory are allocated to each replica.

- Target Utilization: the per-replica resource utilization percentage at which the autoscaler adds another replica to handle the extra load.
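Each replica brings its own connection pool, so these autoscaling settings directly change how many connections Redis sees. The sketch below assumes a Kubernetes Horizontal Pod Autoscaler. It uses the HPA's documented core rule, `ceil(currentReplicas * currentUtilization / targetUtilization)`, clamped to min/max replicas. It deliberately omits the real HPA's tolerance band and stabilization windows. The pool size of 20 is a made-up example value.

```go
package main

import (
	"fmt"
	"math"
)

// desiredReplicas applies the core HPA rule ceil(current * currentUtil / targetUtil),
// clamped to [minReplicas, maxReplicas]. Tolerance and stabilization are omitted.
func desiredReplicas(current int, currentUtil, targetUtil float64, minReplicas, maxReplicas int) int {
	n := int(math.Ceil(float64(current) * currentUtil / targetUtil))
	if n < minReplicas {
		return minReplicas
	}
	if n > maxReplicas {
		return maxReplicas
	}
	return n
}

func main() {
	const poolSize = 20 // hypothetical per-replica max connections
	// 4 replicas at 90% CPU with a 60% target scale out to 6 replicas.
	replicas := desiredReplicas(4, 90, 60, 2, 10)
	// Redis now has to accept up to replicas*poolSize client connections.
	fmt.Println(replicas, replicas*poolSize)
}
```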

When should I update these configs?

In reactive scenarios, you should take a look if:

- Redis is down (duh)

- You’re seeing vague “connection reset” error logs on your services

- You see spiky latency graphs that can be attributed to interactions with Redis

On the other hand, if you’re being proactive, you should check whether:

- You are scale planning for a big infrastructure change, such as adding autoscaling or deploying new services where Redis consumption is expected to increase

- CPU or memory utilization on Redis is consistently low, which means you might be overprovisioned and overpaying

- The default settings haven’t been revisited in months, while production workloads or traffic have changed

Defining Success

Your goal is to optimize connection settings to maximize availability and performance, while minimizing cost.

Your challenge:

- You’ll need to touch interdependent application and infrastructure configs that are often owned by different teams (e.g., maxconnections and maxinstances)

- High-dimensionality configuration plus dynamic traffic loads means that the truly optimal configuration is a moving target hour by hour.

- Autoscaling means an even more dynamic environment where the ground shifts under your feet.

- When a core infrastructure component such as Redis fails, the failures will most certainly cascade into other parts of the system.

How to tune it

Most teams will use a mix of experimentation, rules of thumb, and metrics to keep the connection pool balanced. If this is your first time, expect to spend at least a week building an intuition and getting it right. Unfortunately, a lot of this can only be learned for sure in production environments, so you have to accept some inherent risk to production in order to fix connection pool problems.

Before you start:

- Pull connection pool configs into environment variables so that you can rapidly iterate.

- Set up monitoring for each service, and Redis itself. Take a snapshot of each metric as the baseline.

- If possible, start with a staging environment to get a few safe hypotheses for your production environment. Ideally there is load testing in the staging environment to match prod as closely as possible, including code versions and traffic patterns.

To run experiments:

- Vary one config at a time, try it out in a staging environment and match the observed metrics against the baseline.

- Run each configuration for at least a half hour and ideally longer, as errors may not show up right away.

- Note down whether the config had a positive or negative effect on each metric, and repeat the process varying another value. Although there may be complex interactions and non-linear effects between configs, this is a good proxy for building intuition about each config’s effect.

- Then, start testing the most reliable hypotheses in production environments.
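The bookkeeping in these steps, comparing one trial run against the baseline snapshot, can be scripted. The sketch below is one possible approach. The metric names, the numbers, and the 5% noise tolerance are made up for illustration. It treats a change from a zero baseline, such as errors appearing where there were none, as a large change.

```go
package main

import (
	"fmt"
	"math"
)

// Metric is a hypothetical snapshot value. LowerIsBetter is true for latency
// or error rate and false for throughput.
type Metric struct {
	Value         float64
	LowerIsBetter bool
}

// compare labels each baseline metric "positive", "negative", "neutral" or
// "missing" for the trial run; relative changes within ±tolerance count as noise.
func compare(baseline, trial map[string]Metric, tolerance float64) map[string]string {
	out := make(map[string]string, len(baseline))
	for name, b := range baseline {
		t, ok := trial[name]
		if !ok {
			out[name] = "missing"
			continue
		}
		// Guard against a zero baseline (e.g. no errors before the change).
		denom := math.Max(math.Abs(b.Value), 1e-9)
		change := (t.Value - b.Value) / denom
		if b.LowerIsBetter {
			change = -change // a drop in latency or errors is an improvement
		}
		switch {
		case change > tolerance:
			out[name] = "positive"
		case change < -tolerance:
			out[name] = "negative"
		default:
			out[name] = "neutral"
		}
	}
	return out
}

func main() {
	baseline := map[string]Metric{
		"p99_latency_ms": {12, true},
		"error_rate":     {0.001, true},
		"ops_per_sec":    {5000, false},
	}
	// Illustrative trial run after changing a single setting.
	trial := map[string]Metric{
		"p99_latency_ms": {9, true},
		"error_rate":     {0.001, true},
		"ops_per_sec":    {5100, false},
	}
	fmt.Println(compare(baseline, trial, 0.05))
}
```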

This process looks similar to hyperparameter tuning in ML, so a tool like Ax can help automate it at scale. But you’ll still want to build an intuition behind the results, since running an automated search isn’t practical during an incident.

Aggregated data and best practices

Flightcrew is a config copilot that can estimate ‘magic numbers’ for cloud configs, so we’ve got a sense of how to tune connection pool configs across production workloads. While experimenting, keep an eye out for some of the intuitive and surprising patterns we’re seeing in our data:

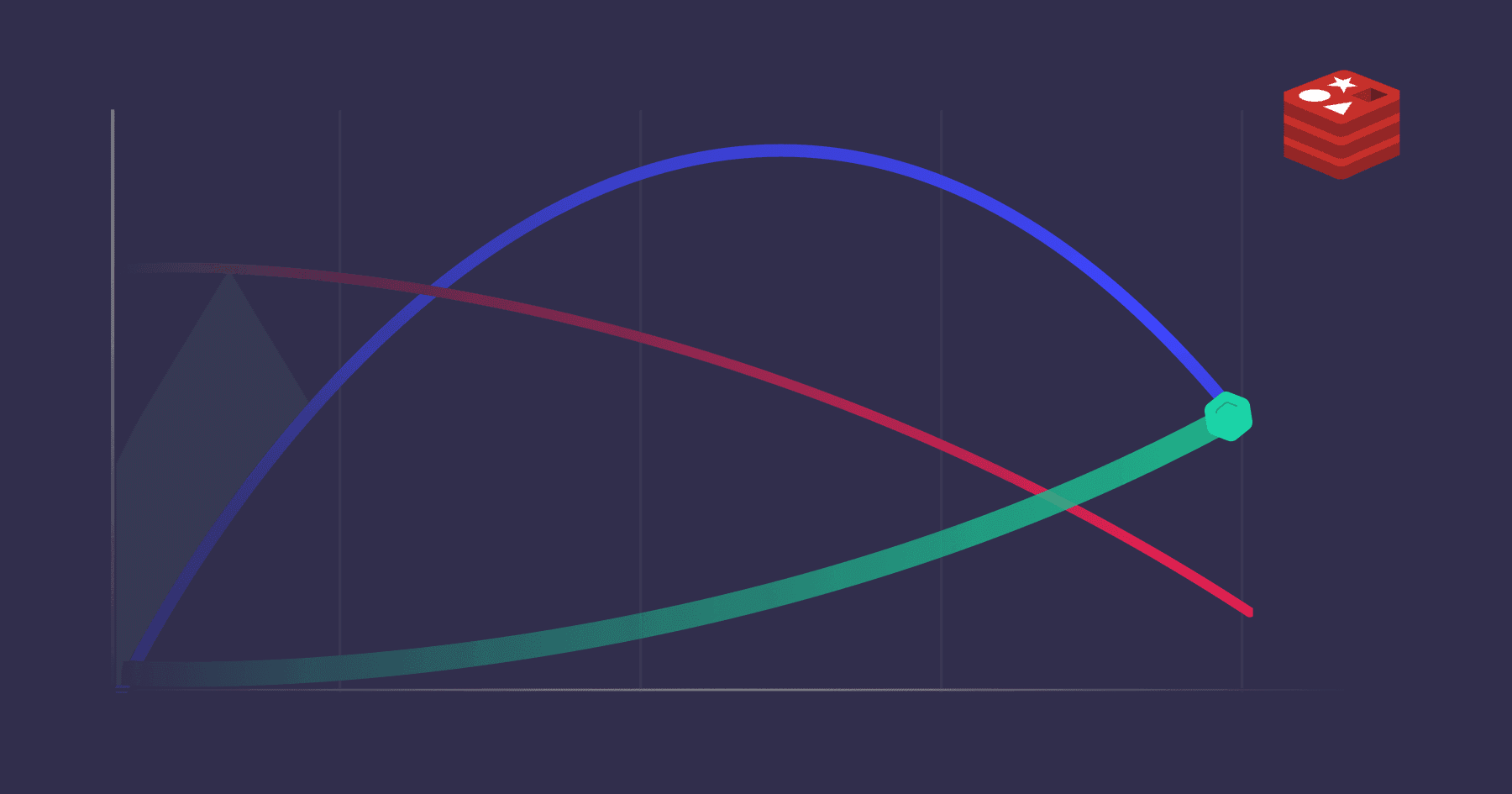

- Decrease the number of connections per replica as the number of replicas goes up, to stay under the maximum number of connections Redis can handle. Pair this with shrinking resources per replica to avoid unnecessary costs.

- The target resource utilization per replica should increase with the number of replicas. Aiming for smaller replicas that run hotter makes the autoscaler more conservative about adding replicas, and therefore about opening precious connections, when the total connection count gets close to the knife’s edge.

- Retry backoff intervals should increase as more connections are used, to avoid further overloading the poor server when something first goes wrong.

- Idle timeouts should be low at small scales, because connections are not often reused and it’s best to reap them and free up resources. The timeout should then increase with scale to enforce stricter reuse of existing connections. However, at maximum scale, it’s best to lower it again because any hanging connection is a waste and should be reaped quickly, especially when replicas are scaling down from peak traffic.
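The first pattern, shrinking connections per replica as replica count grows, amounts to dividing a fixed connection budget. The sketch below assumes you size against the autoscaler's maximum replica count. It also reserves a hypothetical number of connections for other clients, such as admin tools or other services. 10000 is the default `maxclients` in open-source Redis. Managed offerings may differ, so check your server's actual value with `CONFIG GET maxclients`.

```go
package main

import "fmt"

// perReplicaPoolSize splits Redis's connection budget across the maximum number
// of replicas the autoscaler may create. reservedConns is a hypothetical
// allowance for clients outside this service.
func perReplicaPoolSize(redisMaxClients, reservedConns, maxReplicas int) int {
	if maxReplicas <= 0 {
		return 0
	}
	budget := redisMaxClients - reservedConns
	if budget <= 0 {
		return 0
	}
	return budget / maxReplicas // round down so the fleet never exceeds the budget
}

func main() {
	for _, maxReplicas := range []int{5, 20, 50} {
		fmt.Println(maxReplicas, perReplicaPoolSize(10000, 1000, maxReplicas))
	}
}
```

If a replica runs several processes, each with its own client, divide again by the number of pools per replica.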

Some global advice when tuning configs:

- Application and infrastructure configs are deeply intertwined, so don’t take a siloed perspective when settings need to be changed.

- Theoretically, the most optimized solution would be to adjust settings constantly throughout the day and on weekends to match varying traffic. This is impossible for a human and shouldn’t be the goal.

- Assuming a fixed configuration that prioritizes availability over cost, you should generally set values that are optimized for the maximum scale, and this will work well at different loads.

- In general, running more, smaller replicas will help get the most out of autoscaling. More instances means better availability, and smaller instances means there are smaller step changes during traffic cycles, and therefore less wasted money.

- All settings eventually get stale. Leave comments in .yaml files and document experiments for your next tuning session, or when it’s someone else’s turn.
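The claim that smaller replicas waste less money follows from rounding: demand is covered by whole replicas, so the idle slice is always less than one replica's size. The figures below (10.5 vCPUs of demand, 4- vs 1-vCPU replicas) are illustrative. The sketch ignores fixed per-replica overhead and the extra connection pools that more replicas bring.

```go
package main

import (
	"fmt"
	"math"
)

// overprovision returns how many replicas of size replicaSize are needed to
// cover demand, and how much provisioned capacity sits idle as a result.
func overprovision(demand, replicaSize float64) (replicas int, wasted float64) {
	replicas = int(math.Ceil(demand / replicaSize))
	wasted = float64(replicas)*replicaSize - demand
	return replicas, wasted
}

func main() {
	demand := 10.5 // vCPUs needed at this moment (illustrative)
	for _, size := range []float64{4, 1} {
		n, w := overprovision(demand, size)
		fmt.Printf("%.0f-vCPU replicas: %d replicas, %.1f vCPU idle\n", size, n, w)
	}
}
```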

And that’s it! Good luck out there, and feel free to reach out to hello@flightcrew.io with any questions or to find out how Flightcrew can do this for you.

Sam Farid

CTO/Founder

Before founding Flightcrew, Sam was a tech lead at Google, ensuring the integrity of YouTube viewcount and then advancing network throughput and isolation at Google Cloud Serverless. A Dartmouth College graduate, he began his career at Index (acquired by Stripe), where he wrote foundational infrastructure code that still powers Stripe servers. Find him on Bluesky or holosam.dev.